Practical Considerations for your Sub-contracted Process Characterization (PC) Study

Dr. Ian Brown, Senior Director PC & PV Sciences, FUJIFILM Diosynth Biotechnologies, Billingham, UK

Process characterization… an outsourced activity?

Subcontracting a Process Characterization (PC) study can be an expedient and practical undertaking for a biopharmaceutical company. Using an experienced PC team, with proven workflows that have successfully supported product licensure, strengthens the Chemistry, Manufacturing and Controls (CMC) section of a license application. The investment should be returned through a smoother transit through the regulatory approval process, avoiding costly delays to the start of commercial manufacturing caused by re-work at the ‘reviewer questions’ stage. Outsourcing PC also allows the site undertaking the Process Performance Qualification (PPQ) batches to focus its effort on generating manufacturing documentation and risk assessments to support the validation of the product (e.g., process validation protocols, microbial control strategies, large scale resin / membrane re-use protocols, mixing studies, and process holds).

Outsourcing process development activities is common practice for biopharmaceutical companies, and this is typically performed using well understood tech transfer approaches. Typical transfers comprise safety documentation relating to the cell bank and the molecule, alongside communications around the practical execution of the process (e.g., material suppliers, media and buffer recipes, process descriptions and analytical methods). Additionally, for PC programs, it is reasonable to expect that the chosen contract lab will have appropriate experience with PC studies, including individuals skilled in the application of high-throughput technologies and in modern methods for data analytics. However, to maximize the benefit of outsourcing a PC program, special attention should be paid to some PC-specific considerations that will help the program run well at your chosen contractor, without rework or changes of scope along the way. There are three principal areas to consider:

- Feed materials

- Small Scale Model (SSM) and experimental range requirements

- Sample analysis and data interpretation

There are numerous ways to approach the challenges posed by the topics above, some of which carry more residual risk than others. It is therefore key that the company doing the validation (i.e., the company sub-contracting the PC) is clear from the outset on exactly what it requires from the subcontracted PC study.

Feed materials

Supplying feed materials to the Downstream Processing (DSP) part of the process is a critically important consideration for a PC study. To supply a DSP study the feed materials will need to be:

- Representative

- Of sufficient quantity and in relevant volumes to be used in PC

- Of known stability in the intended holding environment

Representative feed material is necessary for PC, as there is little insight to be gained from feed material that does not represent the profile of Critical Quality Attributes (CQAs) expected in the commercial process. Moreover, PC activities are typically ‘modular’ in nature, so parts of a PC study can begin when suitable feed material is available while other parts are postponed until representative feed material becomes available. For this reason, it is generally considered sensible to take feed material from a process executed at the intended commercial scale, and planning PC activities around full-scale manufacturing batches is the most common approach. However, there may be a variety of reasons why taking feed material from the intended commercial scale is not possible (e.g., manufacturing delays or product-intermediate stability challenges). The solution is usually to generate material at an ‘intermediate scale’, which can be done in a shorter time and on a more frequent basis. Choosing a partner with access to such facilities is therefore an important consideration if supply of stable intermediates from the intended commercial scale is likely to pose a problem. There is of course a level of risk associated with this approach, as feed materials derived from the intermediate scale will need to be shown to be ‘suitably representative’ of the commercial scale process. In the highest-risk scenario, this demonstration of representativeness is likely to rely on equivalence testing against commercial scale batches that may not yet have been executed. However, if the process has a history of success at intermediate scale and scaling factors are well understood, then intermediate scale processing can be an effective way to keep a PC project on track.

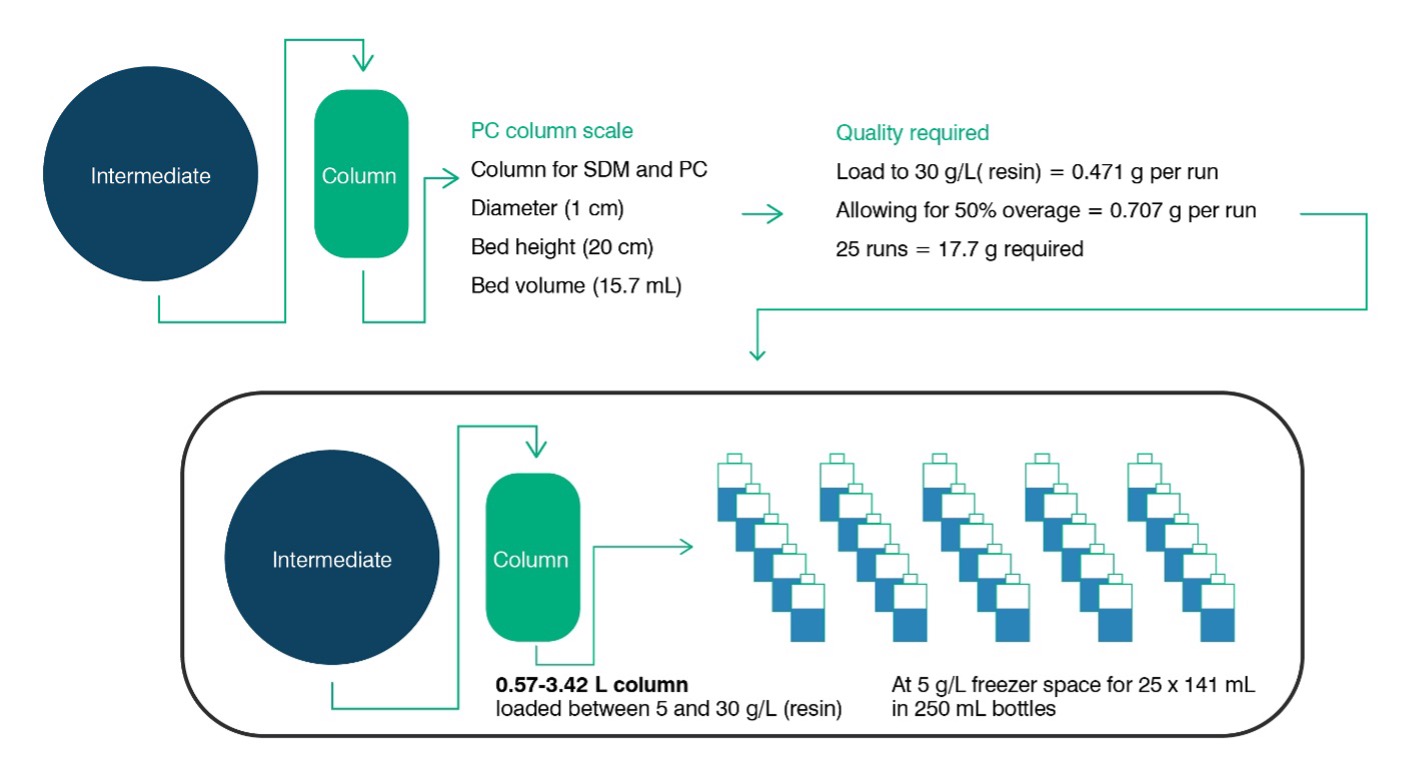

Sufficient volume of feedstock is key to being able to get through the required experiments, and once the size of the intended small-scale model (SSM) is known it is a straightforward exercise to calculate the volume requirements by multiplying by the desired number of experiments (for example: factoring in worst case loadings on chromatography columns). However, deeper consideration in terms of volumes required per experiment, contingency material and overage required per aliquot will be of benefit. To help clients with this challenge, FUJIFILM Diosynth Biotechnologies has tools and expertise available that can assist with a detailed estimate of volume requirements. Figure 1 provides an illustrative example of typical feed material requirements for a single PC study on a chromatography unit operation.

Figure 1: Typical PC feed material requirements for a chromatography step comprising 25 runs loaded to 30 g(product)/L(resin) on a 1 cm diameter column with a bed height of 20 cm. (Note: Overage requirements vary depending on the specific sampling, line priming and loading strategy.)
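The volume arithmetic behind an estimate of this kind can be sketched as follows. This is a minimal illustration rather than the tool referred to above: the column geometry, loading and run count come from the Figure 1 caption, while the feed titre (5 g/L) and the 20% overage are assumptions chosen purely for the example, and the function names are hypothetical.

```python
import math


def column_volume_ml(diameter_cm: float, bed_height_cm: float) -> float:
    """Packed-bed volume of a cylindrical column in mL (1 cm^3 = 1 mL)."""
    radius_cm = diameter_cm / 2
    return math.pi * radius_cm ** 2 * bed_height_cm


def feed_volume_ml(
    runs: int,
    load_g_per_l_resin: float,
    diameter_cm: float,
    bed_height_cm: float,
    feed_titre_g_per_l: float,  # assumption: product concentration in the feed
    overage_fraction: float,    # assumption: allowance for sampling, priming, contingency
) -> float:
    """Total feed volume (mL) needed to load every run, including overage."""
    resin_volume_l = column_volume_ml(diameter_cm, bed_height_cm) / 1000
    product_per_run_g = load_g_per_l_resin * resin_volume_l
    volume_per_run_ml = product_per_run_g / feed_titre_g_per_l * 1000
    return runs * volume_per_run_ml * (1 + overage_fraction)


# Figure 1 scenario: 25 runs, 30 g/L resin, 1 cm x 20 cm column.
total_ml = feed_volume_ml(
    runs=25,
    load_g_per_l_resin=30.0,
    diameter_cm=1.0,
    bed_height_cm=20.0,
    feed_titre_g_per_l=5.0,   # hypothetical titre
    overage_fraction=0.20,    # hypothetical overage
)
print(f"Column volume: {column_volume_ml(1.0, 20.0):.1f} mL")
print(f"Estimated feed requirement: {total_ml / 1000:.2f} L")
```

In practice the overage term is the part most worth refining, since it depends on the aliquot size, the sampling plan and the line priming strategy for the specific study.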

Unstable feed materials pose an additional challenge to the delivery of PC programs. The conventional workflow of freezing feed material aliquots is not available, so preparative runs must be performed at laboratory scale and their output processed through the downstream operation shortly afterwards. Similarly, there may be a requirement for lab scale operations to generate feed material in a deliberately ‘misrepresentative’ fashion. An example is a linkage study, where a unit operation is intentionally run to generate higher levels of a particular impurity so that downstream operations can demonstrate redundancy in clearing unexpectedly high levels.

Takeaways:

- Be aware of the timing of commercial scale batches

- Assess the stability of intermediates

- Consider the relative risk of performing intermediate scale batches for feed material supply

- Verify the capabilities of the contract lab to generate mini-batches and worst-case feed material

Small Scale Model (SSM) and experimental range requirements

To ensure that the data generated during PC is suitable to support the manufacturing process, an assessment of the SSM is expected (1). Typically, this exercise involves comparing process, quality and performance attributes of the designated unit operation executed at small scale (i.e., at the scale that will be used for PC studies) with the same attributes at commercial scale. Methods of comparison can vary, but a statistical demonstration of ‘equivalence’ alongside adherence to pre-defined and statistically justified ranges is the most common approach to assessing and justifying an SSM. Typically, the level of rigor applied to the justification and assessment of an SSM would be based on a considered evaluation of the risk associated with the complexity of the unit operation as well as consideration of any prior knowledge around the execution of the small-scale experiments.
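As an illustration of what a statistical demonstration of ‘equivalence’ can look like, the sketch below applies the two one-sided tests (TOST) procedure in its confidence-interval form: equivalence is concluded when the 90% confidence interval for the difference in means lies entirely inside a pre-defined margin. It is one possible approach, not a prescribed method. The step-yield values, the margins and the pooled-variance assumption are hypothetical, and the t quantile (1.860 for 8 degrees of freedom) is taken from standard tables rather than computed.

```python
import math
from statistics import mean, variance


def tost_equivalent(small_scale, commercial, margin, t_crit):
    """TOST via the confidence-interval formulation.

    small_scale, commercial: measured values of one attribute at each scale.
    margin: pre-defined equivalence margin (same units as the data).
    t_crit: one-sided 95% t quantile for df = n1 + n2 - 2 (from tables).
    Returns (is_equivalent, (ci_lower, ci_upper)) for the difference in means.
    """
    n1, n2 = len(small_scale), len(commercial)
    diff = mean(small_scale) - mean(commercial)
    # Pooled variance assumes similar variability at both scales.
    pooled_var = (
        (n1 - 1) * variance(small_scale) + (n2 - 1) * variance(commercial)
    ) / (n1 + n2 - 2)
    std_err = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    lower, upper = diff - t_crit * std_err, diff + t_crit * std_err
    return (-margin < lower and upper < margin), (lower, upper)


# Hypothetical step yields (%) from five runs at each scale.
ssm_yield = [88.0, 89.5, 90.0, 88.5, 89.0]
commercial_yield = [89.0, 90.0, 88.5, 89.5, 90.5]

ok, ci = tost_equivalent(ssm_yield, commercial_yield, margin=2.0, t_crit=1.860)
print(f"90% CI for difference: ({ci[0]:.2f}, {ci[1]:.2f}); equivalent: {ok}")
```

The choice of margin carries most of the weight here, which is why the criteria are best agreed and justified before the comparison is run.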

When outsourcing an upstream PC study, it is unlikely that the manufacturing seed chain can be used, because the large scale and small-scale operations are in different physical locations. The approach of running small scale ‘satellite’ production vessels from a manufacturing seed chain will therefore not be feasible. However, it is generally recognized that while a satellite approach reduces the number of variables by using the same inoculum, it is usually more practical to execute upstream PC studies using a non-satellite approach, which removes the interdependence between manufacturing and the PC work. This approach also enables the use of high throughput technologies that run multiple experiments simultaneously, generating a greater level of knowledge and understanding to underpin the control strategy. For example, the Sartorius Ambr® 250 can run twenty-four small scale reactors simultaneously, which, combined with an appropriate statistical experimental design and at-line analysis, will provide a high level of insight in a short period of time.
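To make the link between a twenty-four vessel system and a statistical design concrete, the sketch below builds a two-level full factorial design in four factors (16 runs) and adds eight center-point runs to fill all 24 vessels. The factor names, ranges and the 16/8 split are hypothetical and chosen only for illustration. A real study would select factors from the risk assessment, might prefer a fractional or response-surface design, and would randomize the allocation of runs to vessels.

```python
from itertools import product


def two_level_design_with_center_points(factors, n_center):
    """Full factorial at low/high levels plus replicated center points.

    factors: mapping of factor name -> (low, high).
    n_center: number of center-point replicates to append.
    Returns a list of runs, each a dict of factor name -> setting.
    """
    names = list(factors)
    runs = []
    # Every combination of low (-1) and high (+1) across all factors.
    for coded in product((-1, 1), repeat=len(names)):
        run = {}
        for name, level in zip(names, coded):
            low, high = factors[name]
            run[name] = low if level == -1 else high
        runs.append(run)
    # Center points sit at the midpoint of every factor range.
    center = {name: (low + high) / 2 for name, (low, high) in factors.items()}
    runs.extend(dict(center) for _ in range(n_center))
    return runs


# Hypothetical upstream factors and ranges.
factors = {
    "pH": (6.8, 7.2),
    "temperature_C": (35.5, 37.5),
    "dissolved_oxygen_pct": (30.0, 50.0),
    "seed_density_e6_cells_per_mL": (0.3, 0.7),
}

design = two_level_design_with_center_points(factors, n_center=8)
print(f"{len(design)} runs for a 24-vessel system")
```

Replicated center points give an estimate of run-to-run variability and provide target-condition data at small scale.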

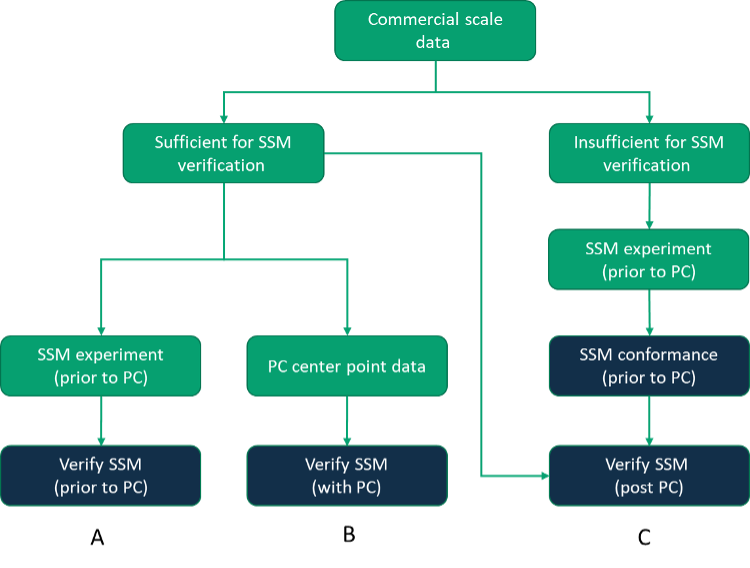

SSM verification is contingent on sufficient commercial scale data being available to enable a valid comparison with small-scale data. The approach taken may therefore vary depending on the availability of commercial scale data, and Figure 2 illustrates three common approaches to SSM verification. If sufficient commercial scale batches are completed and feed material is available for DSP, prospective establishment of the SSM is the lowest risk approach, as the SSM will be established before PC studies begin (Path A). Alternatively, a faster approach may be to gather center point data from process characterization runs for subsequent demonstration of equivalence to commercial scale data (Path B). This is particularly applicable to upstream processing, where execution of the SSM is not contingent upon representative feed material from commercial scale. However, if insufficient commercial scale data is available, it may also be acceptable to perform a basic SSM conformance exercise against simple criteria based on limited knowledge and understanding, then supplement the small-scale data set with a report that assesses the SSM more fully against the commercial scale batches once data is available (Path C).

Figure 2: Approaches to SSM verification.

The parameters of interest for PC experimentation should be derived from a completed risk assessment process. The subsequent PC planning exercise may be done immediately after the risk assessment but should be reviewed for compatibility with the sub-contractor’s PC equipment before PC activities commence. A prospective and agreed plan based on a sound risk assessment is especially important in sub-contracted PC activities, as additional parameters that emerge after PC experimentation has commenced may necessitate repeat or additional studies, which will in turn disrupt timelines.

When assigning study ranges for PC studies, it is important to consider the requirements of the ranges that will be justified. For example, the minimum PC study requirement should be to bracket the range that the manufacturing equipment can deliver. Working on this basis keeps the ranges studied in PC to a minimum and should correspond with the highest level of ‘run success’ in PC studies. However, if ranges ‘wider’ than the capability of current manufacturing equipment are desired (with a view to future proofing the data so that it can support implementation of the process at other facilities), meaningful responses and interactions are more likely to be seen across the ranges studied, because the experiments work closer to process extremities. In summary, PC ranges should be considered based on the commercial supply strategy through PPQ and beyond into routine commercial manufacturing.
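The ‘bracketing’ requirement can be expressed as a simple check: the range studied in PC should contain the range the manufacturing equipment can deliver around its setpoint. The sketch below is illustrative only. The parameter (pH), the setpoint and the control tolerance are hypothetical values, and the function names are not taken from any particular tool.

```python
def equipment_range(setpoint: float, tolerance: float) -> tuple:
    """Range the manufacturing equipment can deliver around a setpoint."""
    return (setpoint - tolerance, setpoint + tolerance)


def brackets(pc_range: tuple, delivered_range: tuple) -> bool:
    """True if the PC study range fully contains the delivered range."""
    pc_low, pc_high = pc_range
    eq_low, eq_high = delivered_range
    return pc_low <= eq_low and eq_high <= pc_high


# Hypothetical example: pH setpoint 7.0, equipment control capability +/- 0.1.
delivered = equipment_range(setpoint=7.0, tolerance=0.1)

print(brackets((6.8, 7.2), delivered))   # PC range wider than equipment capability
print(brackets((6.95, 7.2), delivered))  # PC lower limit does not cover the low side
```

Running the same check against the equipment capability of each prospective manufacturing site is one way to document whether a set of PC ranges would also support implementation at other facilities.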

Takeaways:

- Agree the criteria and strategy for SSM assessment

- Complete a process risk assessment and have a clear view of the PC scope

- Consider and document the ranges that need to be justified to support the ongoing commercial supply of the product

Sample analysis and data interpretation

Interpretation of the relevant responses from both SSM and PC experimentation is important to ensure that correct decisions are made on the control strategy that will ultimately be applied to the commercial process. This exercise starts with the identification and assignment of CQAs and performance attributes to the relevant unit operations, then continues with a more granular assessment of the same attributes against the relevant processing parameters within each unit operation. Thus, when the PC study is designed, the relevant attributes can be assessed for each unit operation. However, any failure in the initial assignment of CQAs to unit operations may mean that a significant attribute is overlooked and not tested during PC. Detecting such a failure at any point after PC execution will likely result in re-work and potentially costly delays to commercialization of the product. Furthermore, on a more practical level, a list of the potentially impacted attributes enables the assembly of a comprehensive sample plan including:

- Number of samples taken

- Purpose of samples taken

- Storage temperature

- Requirement for sample pre-treatment

- Requirement for outsourcing sample analysis
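The elements listed above map naturally onto a simple structured record, which makes it easy to total sample numbers and flag assays that must be sent out. The following is a minimal sketch under stated assumptions: the field names, unit operation, attributes, storage temperatures and run count are all hypothetical and would be replaced by the agreed sample plan.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SamplePlanEntry:
    unit_operation: str
    attribute: str                       # CQA or performance attribute tested
    purpose: str                         # why the sample is taken
    samples_per_run: int                 # number of samples taken per run
    storage_temp_c: float                # storage temperature
    pre_treatment: Optional[str] = None  # e.g. neutralization before storage
    outsourced: bool = False             # analysis performed at another lab


def total_samples(plan, runs_per_unit_operation):
    """Total sample count across the plan for the given number of runs."""
    return sum(
        entry.samples_per_run * runs_per_unit_operation[entry.unit_operation]
        for entry in plan
    )


def outsourced_attributes(plan):
    """Attributes whose analysis must be arranged with an external lab."""
    return sorted({entry.attribute for entry in plan if entry.outsourced})


# Hypothetical plan for one chromatography unit operation.
plan = [
    SamplePlanEntry("Capture chromatography", "Host cell protein",
                    "CQA clearance", 2, -70.0),
    SamplePlanEntry("Capture chromatography", "Aggregate",
                    "CQA clearance", 1, 5.0, pre_treatment="Neutralize to pH 7"),
    SamplePlanEntry("Capture chromatography", "Residual DNA",
                    "CQA clearance", 1, -70.0, outsourced=True),
]
runs = {"Capture chromatography": 25}

print(total_samples(plan, runs), "samples;",
      "outsourced:", outsourced_attributes(plan))
```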

Ultimately, the data generated will need to support the ranges in the process control strategy and may be referenced in the license application. Given this intended use, a level of ‘assay qualification’ should be performed to ensure that the assays are fit for purpose on the intended intermediates. Although there is no expectation for ‘fully validated’ methods, methods are expected to be scientifically sound to support PC studies. This is made clear in the FDA document ‘Guidance for Industry Process Validation: General Principles and Practices’ (2), where Section VII, pg. 7, Analytical Methodology states:

‘Process knowledge depends on accurate and precise measuring techniques used to test and examine the quality of drug components, in-process materials, and finished products. Validated analytical methods are not necessarily required during product- and process-development activities or when used in characterization studies. Nevertheless, analytical methods should be scientifically sound (e.g., specific, sensitive, and accurate) and provide results that are reliable.’

Figure 3 illustrates a simple qualification plan for process intermediates on a simple bioprocess.

Figure 3: Potential qualification plan for in-process intermediates on a simple bioprocess.

Finally, once the data has been generated, there should be a clear understanding with the sub-contractor as to how the data should be presented. Data can be communicated in many ways, and it makes sense to consider in advance exactly what is required. Options range from simple communication of the data itself (e.g., tables of raw data presented in a simple report format) to a full statistical interpretation using a pre-agreed methodology and algorithmic approaches that generate impact ratios.

Takeaways:

- Consider the required CQA list to support validation before PC starts (avoids changes to scope and re-work)

- Include an analytical qualification exercise to support the data generated in PC

- Construct a detailed sample plan and agree a mechanism for communication of the results

- Agree on the level and type of statistical analysis required in advance

Conclusion

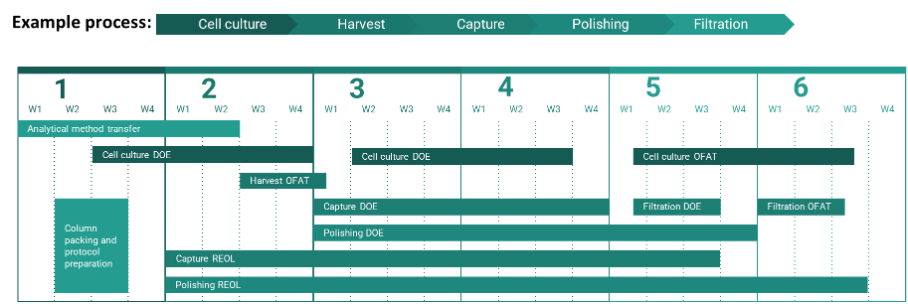

Due to the modular nature of PC work, projects can be completed on accelerated timelines if the correct prerequisites are in place (outlined in this review). Figure 4 shows an example of how PC timelines can be accelerated to enable the completion of a PC study within six months through the application of modular work packages executed in parallel.

Figure 4: Parallel workflows expedite PC timelines.

In summary, access to specialist knowledge, equipment and workflows are the key benefits of sub-contracting a PC study. A robust technology transfer exercise, supported by detailed consideration of the topics highlighted in this review, will help you to realize the best value from your outsourced study. Furthermore, working with an experienced partner such as FUJIFILM Diosynth Biotechnologies gives you access to the tools and processes in place to support PC programs, and to the considerable knowledge and experience available to support your product on its journey through a PC workflow to commercialization.

References:

1. BioPhorum Operations Group. Justification of Small-Scale Models: An Industry Perspective. May 2021.

2. Food and Drug Administration (FDA). Process Validation: General Principles and Practices. Guidance for Industry. Jan 2011. https://www.fda.gov/media/71021/download
